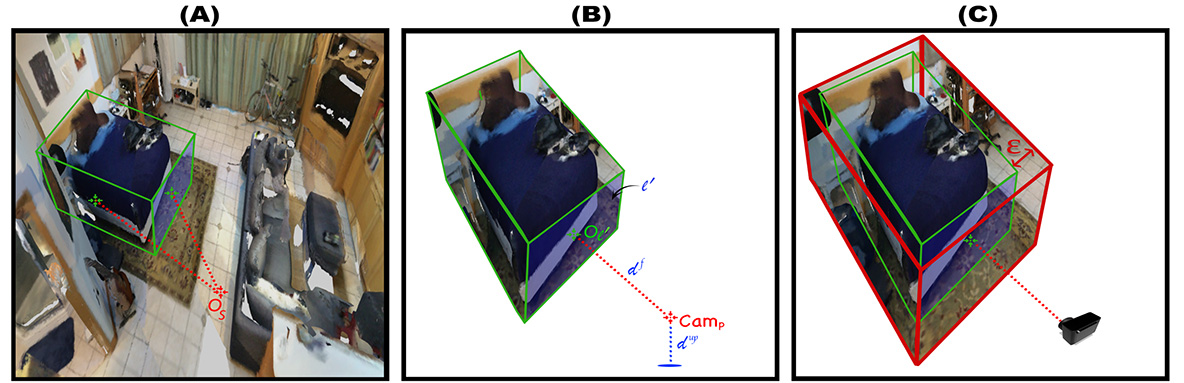

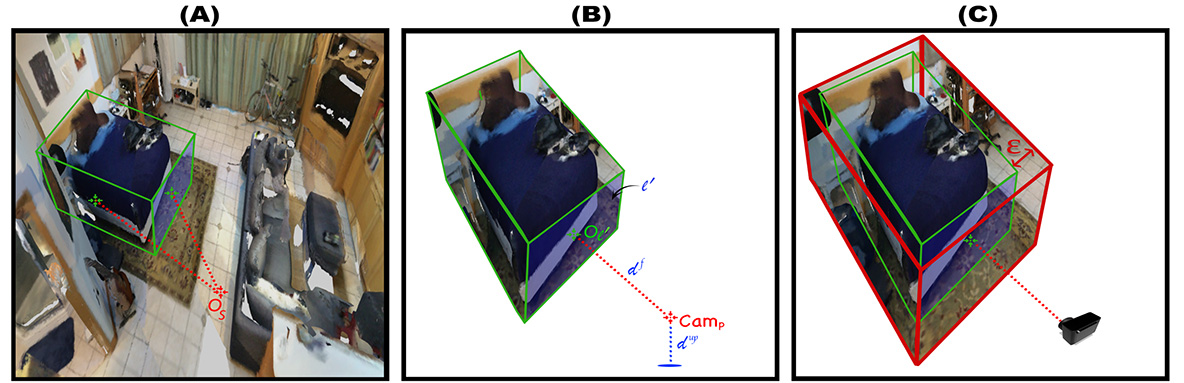

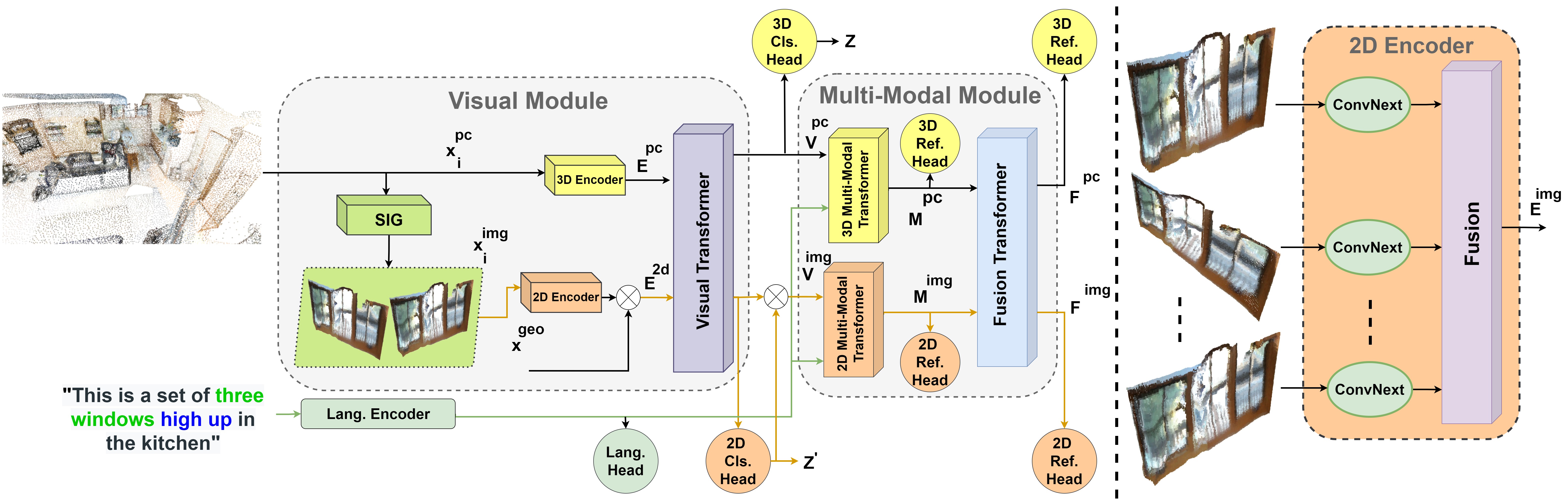

Look Around: 2D Synthetic Images Generator (SIG)

The 3D visual grounding task has been explored with visual and language streams that comprehend referential language to identify target objects in 3D scenes. However, most existing methods devote the visual stream to capturing 3D visual clues using off-the-shelf point cloud encoders. The main question we address in this paper is: “Can we consolidate the 3D visual stream with 2D clues synthesized from point clouds and efficiently utilize them in training and testing?” The main idea is to assist the 3D encoder by incorporating rich 2D object representations without requiring extra 2D inputs. To this end, we leverage 2D clues, synthetically generated from 3D point clouds, and empirically show that they boost the quality of the learned visual representations. We validate our approach through comprehensive experiments on the Nr3D, Sr3D, and ScanRefer datasets and show consistent performance gains over the state of the art. Our proposed module, dubbed Look Around and Refer (LAR), significantly outperforms state-of-the-art 3D visual grounding techniques on these three benchmarks.
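The abstract does not spell out how the 2D clues are synthesized, so the following is only a rough illustration of the general idea of rendering 2D views from an object's point cloud. It assumes a simple pinhole camera, cameras placed on a circle around the object, and nearest-point-wins occlusion handling. The function names (`look_at`, `render_view`, `look_around`) and all parameters (`num_views`, `radius`, `height`, `size`, `focal`) are hypothetical and not taken from the paper or its code.

```python
import numpy as np


def look_at(camera_pos, target, up=np.array([0.0, 0.0, 1.0])):
    """World-to-camera rotation R and translation t (x right, y down, z forward)."""
    forward = target - camera_pos
    forward = forward / np.linalg.norm(forward)
    right = np.cross(forward, up)
    right = right / np.linalg.norm(right)
    down = np.cross(forward, right)
    R = np.stack([right, down, forward])  # rows: camera axes in world coordinates
    t = -R @ camera_pos
    return R, t


def render_view(points, colors, camera_pos, target, size=32, focal=32.0):
    """Project colored points onto a size x size image with a pinhole camera."""
    R, t = look_at(camera_pos, target)
    cam = points @ R.T + t                      # (N, 3) camera-space coordinates
    in_front = cam[:, 2] > 1e-6                 # drop points behind the camera
    cam, colors = cam[in_front], colors[in_front]

    u = np.round(focal * cam[:, 0] / cam[:, 2] + size / 2).astype(int)
    v = np.round(focal * cam[:, 1] / cam[:, 2] + size / 2).astype(int)
    inside = (u >= 0) & (u < size) & (v >= 0) & (v < size)
    u, v, z, colors = u[inside], v[inside], cam[inside, 2], colors[inside]

    # Occlusion: sort nearest first, then keep the first point hitting each pixel.
    order = np.argsort(z)
    u, v, colors = u[order], v[order], colors[order]
    _, first = np.unique(v * size + u, return_index=True)

    image = np.zeros((size, size, 3), dtype=np.float32)
    image[v[first], u[first]] = colors[first]
    return image


def look_around(points, colors, num_views=5, radius=3.0, height=1.0, size=32):
    """Render num_views images from cameras on a circle around the object center."""
    center = points.mean(axis=0)
    views = []
    for angle in np.linspace(0.0, 2.0 * np.pi, num_views, endpoint=False):
        offset = np.array([radius * np.cos(angle), radius * np.sin(angle), height])
        views.append(render_view(points, colors, center + offset, center, size=size))
    return np.stack(views)                      # (num_views, size, size, 3)
```

Under these assumptions, `look_around(points, colors)` takes an `(N, 3)` array of object points and an `(N, 3)` array of RGB values in `[0, 1]` and returns a stack of synthetic views that a 2D encoder could consume; no extra 2D input is needed beyond the point cloud itself.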

@inproceedings{bakrlook,
  title={Look Around and Refer: 2D Synthetic Semantics Knowledge Distillation for 3D Visual Grounding},
  author={Bakr, Eslam Mohamed and Alsaedy, Yasmeen Youssef and Elhoseiny, Mohamed},
  booktitle={Advances in Neural Information Processing Systems}
}